Healthcare data grows by petabytes every day. With over a billion healthcare encounters a year in the United States alone, this volume of data has outgrown current structures for data governance and secondary use. The past decade successfully delivered data digitization and a reduction in paper records, but in the next decade healthcare leaders must play catch-up: we need to find the right tools to effectively govern clinical data in EHRs. Ultimately, this will pay rich dividends in improving both care quality and efficiency.

First, let’s talk about a problem we believe is the largest challenge for this generation’s health IT and informatics professionals: data quality. Medicine and nursing historically started as craft industries, where students learned under mentors with myriad ways to document for effective diagnosis and treatment. Documentation wasn’t consistent between institutions or even individual clinicians.

From the 1960s through the 1980s, standardized documentation made inroads into medicine. Physicians began adopting SOAP notes to organize their reasoning about individual patients. Clinical flowsheets became widely adopted to streamline information for critically ill patients. Early data standardization efforts also supported healthcare billing. And from early on, pioneering clinicians began to think about how computers could organize medical information to support the clinical workflow, work that evolved into the electronic health record designs we see today.

What is data governance?

A set of practices to help organizations achieve engaged participation representing the interests of the entire organization for making critical decisions. It is needed to provide input into the organization’s implementation of external or regulatory requirements. Governance includes monitoring data quality to ensure that the organization successfully realizes its desired outcomes and receives business value from data management activities.

– Adapted from HealthIT.gov Playbook

EHR adoption was slow through the 2000s, characterized by implementation of disparate best-of-breed systems across departments and sites of care. Hospitals had emergency department, laboratory, pharmacy and specialty systems that were typically loosely integrated. Ambulatory care providers had a mishmash of processes to support documentation and electronic billing, but lacked basic capabilities expected of today’s electronic health records. Then in 2009, the American Recovery and Reinvestment Act set up a program called Meaningful Use to catapult EHRs forward.

This program brought electronic health records to well over 80% of ambulatory physicians and virtually every hospital in the United States.

Early studies on digital data quality within electronic health records revealed a long list of concerns, including incompleteness, duplication, inconsistent organization, fragmentation and inadequate use of coded data within EHR workflows. Departmental applications worked well independently for local needs, but not across clinician disciplines. These problems stem largely from scale: more than 4 million U.S. practitioners document care in more than 100 certified electronic health records, and they all started using these EHRs at virtually the same time.

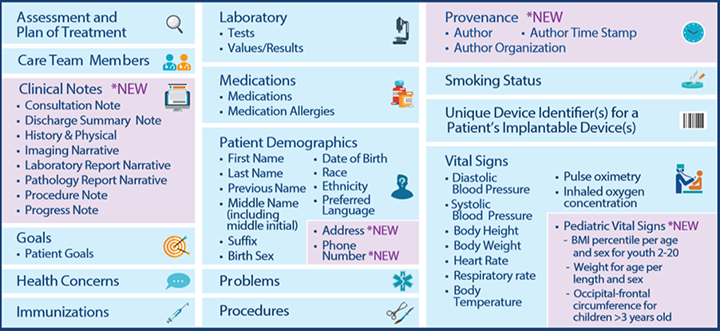

Beyond adoption, Meaningful Use also set minimum expectations for data standardization with electronic health records and other health IT systems. This led to the Interoperability Standards Advisory (ISA) and the U.S. Core Data for Interoperability (USCDI). The ISA sets preferred terminologies while the USCDI sets a minimal expectation of structured data used for care workflows within and between institutions.

The wave of EHR adoption from 2010 to 2020 successfully transitioned clinicians from paper to computers, and while there have been government efforts to support standardization of the data in these systems, no federal program could universally achieve high-quality data. It’s not a realistic expectation. Data governance is a local task that requires hands-on training, policies for caregivers and ancillary staff, a culture of accountability, and the right tools to monitor and improve data quality.

Establishing Data Quality Checks in the Real World

It’s no simple task, but in a jointly authored paper, we laid out 20 starter rules to examine real-world data quality. Some related to patient safety, while others were tied to making that data effective for decision support, population health and interoperability between systems. Here’s a sampling of three rules and why they are important:

1. Allergies Should Be Structured in the Medical Record

Domain: Patient Safety

Real-World Context: About 80% of allergies in the medical record are recorded incorrectly, while 30% are just written words (no code or structure). Even fewer include the allergic reaction or severity.

Why Important? EHR drug-allergy alerts generally work only on structured data. If you prescribe ampicillin to a patient with a “penicillin” allergy, the conflict may go undetected if the system doesn’t have an appropriately structured allergy entry. Incomplete and low-quality allergy data can also produce suboptimal displays for clinicians and block decision-support functionality.
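Rule 1 can be checked mechanically once allergy entries are available as records. The sketch below is a minimal illustration, not the rule definition from the paper. It assumes a hypothetical `AllergyEntry` record with a free-text label, an optional allergen code and code system, and optional reaction and severity fields; those field names and the placeholder code are invented for the example. It flags entries that are free text only or that lack a reaction or severity, and it summarizes how many entries are coded and how many are complete.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AllergyEntry:
    # Hypothetical record layout for one allergy list entry.
    text: str                          # label as displayed or typed by the clinician
    code: Optional[str] = None         # allergen code; None when recorded as free text only
    code_system: Optional[str] = None  # terminology the code comes from
    reaction: Optional[str] = None     # e.g. "hives"
    severity: Optional[str] = None     # e.g. "moderate"


def check_allergy(entry: AllergyEntry) -> List[str]:
    """Return the data quality issues found in one entry (an empty list means it passes)."""
    issues = []
    if not entry.code or not entry.code_system:
        issues.append("allergen not coded")
    if not entry.reaction:
        issues.append("reaction missing")
    if not entry.severity:
        issues.append("severity missing")
    return issues


def allergy_quality_summary(entries: List[AllergyEntry]) -> dict:
    """Percentage of entries that are coded, and percentage that pass every check."""
    total = len(entries)
    if total == 0:
        return {"total": 0, "coded_pct": 0.0, "complete_pct": 0.0}
    coded = sum(1 for e in entries if e.code and e.code_system)
    complete = sum(1 for e in entries if not check_allergy(e))
    return {
        "total": total,
        "coded_pct": 100.0 * coded / total,
        "complete_pct": 100.0 * complete / total,
    }


if __name__ == "__main__":
    entries = [
        # Placeholder code and system, not a real terminology entry.
        AllergyEntry("penicillin", code="HYPOTHETICAL-001", code_system="example",
                     reaction="hives", severity="moderate"),
        AllergyEntry("PCN - rash"),  # free text only
    ]
    for e in entries:
        print(e.text, check_allergy(e))
    print(allergy_quality_summary(entries))
```

The second entry is the kind that matters for safety: a coded drug-allergy check would typically have nothing to match against the string "PCN - rash", so an ampicillin order may not trigger an alert.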

2. Quantitative Laboratory Results Should Have a Clearly Available Reference Range with Low or High Results Flagged Appropriately

Domain: Patient Safety & Data Quality

Real-World Context: Reference ranges for the same test often differ from one laboratory to another, which can obscure a clinician’s understanding of where a result falls relative to normal values.

Why Important? When clinicians miss low or high flags on a laboratory result, they may miss something critical to patient care. Well-structured data helps prevent such oversights, makes clinicians more effective EHR users and allows data to be effectively integrated into downstream analytics and population health.
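Rule 2 combines two checks: a reference range must be present, and the stored low/high flag must agree with it. A minimal sketch follows. It assumes a hypothetical `LabResult` record that carries the reporting lab’s own reference range alongside the value, so results from different labs are each judged against their own range, and it assumes flags are stored as "L", "H" or `None` for normal. Real systems use richer flag vocabularies and unit handling, which this ignores, and the potassium values are illustrative only.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class LabResult:
    # Hypothetical record: the range travels with the result, since it varies by lab.
    test_name: str
    value: float
    unit: str
    ref_low: Optional[float] = None   # lower limit of the reporting lab's range
    ref_high: Optional[float] = None  # upper limit; one-sided ranges leave one limit as None
    flag: Optional[str] = None        # stored flag: "L", "H", or None for normal (assumed convention)


def expected_flag(result: LabResult) -> Optional[str]:
    """Flag implied by the result's own reference range."""
    if result.ref_low is not None and result.value < result.ref_low:
        return "L"
    if result.ref_high is not None and result.value > result.ref_high:
        return "H"
    return None


def check_lab_result(result: LabResult) -> List[str]:
    """Return the data quality issues for one quantitative result."""
    if result.ref_low is None and result.ref_high is None:
        # Without a range the flag cannot be verified, so stop here.
        return ["no reference range"]
    expected = expected_flag(result)
    if result.flag != expected:
        return [f"flag {result.flag!r} does not match expected {expected!r}"]
    return []


if __name__ == "__main__":
    results = [
        LabResult("potassium", 4.2, "mmol/L", 3.5, 5.1, None),  # normal, correctly unflagged
        LabResult("potassium", 5.8, "mmol/L", 3.5, 5.1, None),  # high but not flagged
        LabResult("potassium", 5.8, "mmol/L"),                  # no reference range at all
    ]
    for r in results:
        print(r.value, check_lab_result(r))
```

Keeping the range on each result, rather than in a single global table, is what lets the check cope with labs that report different normal ranges for the same test.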

3. Medications Should Be Appropriately Encoded in RxNorm

Domain: Data Quality

Real-World Context: Even though Meaningful Use required medications to be recorded with RxNorm, many implementations do not encode common medications in this vocabulary. One study demonstrated that only about 50% of medications were appropriately structured for purposes of monitoring care quality.

Why Important? Quality measurement, clinical decision support, population health and interoperability all benefit from standard medication data. Without high-quality data, complex medication-based logic in automated applications can fail, often forcing staff to review blank fields by hand.
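Rule 3 can be measured as a coverage rate. The sketch below assumes medication entries shaped loosely like a coded concept with a `system` URI and a `code`, and treats an entry as RxNorm-encoded when the system is the RxNorm URI used by HL7 FHIR and the code is a numeric RxCUI. It checks only the form of the code; confirming that an RxCUI exists and matches the drug would need a lookup against RxNorm itself. The record layout, the local code system and the placeholder codes are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

# Code system URI that HL7 FHIR uses to identify RxNorm.
RXNORM_SYSTEM = "http://www.nlm.nih.gov/research/umls/rxnorm"


@dataclass
class MedicationEntry:
    # Hypothetical record layout for one medication list entry.
    display: str                   # name as shown to the clinician
    system: Optional[str] = None   # code system URI, if any
    code: Optional[str] = None     # code within that system


def is_rxnorm_encoded(med: MedicationEntry) -> bool:
    """True if the entry carries an RxNorm code of the right form (RxCUIs are numeric strings)."""
    return (
        med.system == RXNORM_SYSTEM
        and med.code is not None
        and med.code.isascii()
        and med.code.isdigit()
    )


def rxnorm_coverage(meds: List[MedicationEntry]) -> float:
    """Percentage of medication entries that are RxNorm-encoded."""
    if not meds:
        return 0.0
    return 100.0 * sum(is_rxnorm_encoded(m) for m in meds) / len(meds)


if __name__ == "__main__":
    meds = [
        MedicationEntry("drug A", RXNORM_SYSTEM, "111111"),        # placeholder, not a real RxCUI
        MedicationEntry("drug B", RXNORM_SYSTEM, "222222"),        # placeholder, not a real RxCUI
        MedicationEntry("drug C", "urn:local:formulary", "C-17"),  # hypothetical local code only
        MedicationEntry("drug D"),                                 # free text only
    ]
    print(f"RxNorm coverage: {rxnorm_coverage(meds):.0f}%")
```

Tracking this percentage per clinic or per interface can show where unencoded medications enter the record, which is usually more actionable than a single organization-wide number.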

Steps Toward Better Data Governance for Your Organization

The examples above are illustrative and represent only a small part of building the right data governance infrastructure within any organization, whether that be a health system, health information exchange or health plan. Higher-quality data within electronic health records pays for itself through better patient care. It eases clinical workflow, improves decision support and enables accurate performance measurement. Moreover, it empowers cross-provider interoperability. Clinicians and analysts alike spend less time on data cleanup and more time on improving care quality and efficiency. The reality, however, is that few or no resources are allotted to data cleanup, so problems can persist and spread through growing data exchange.

There are many resources that can help, such as the SAFER Guides published by ONC and various programs in health information management and informatics. Whatever you do to improve data quality and governance, it needs to be part of routine practice with real-world data. Running a test against sample data once every few years isn’t effective data governance; it’s just box checking.

A scalable healthcare data architecture for surveying and monitoring data quality and utilization will require integrating data from electronic health records and other systems into a second tier of data. Eric Schmidt, former chief executive officer of Google and current chair of the U.S. Department of Defense’s Defense Innovation Board, laid out this formula for success: “The conclusion you come to, speaking as the computer scientist and not the doctor in the room, is that you need a second tier of data. The primary data stores are very important and will continue to be the EHRs in the medical system will be supplemented, not supplanted, with a second tier of data.”

Such a technical foundation will enable data governance to scale using the full range of advanced technologies, including expert rules engines, natural language processing, machine learning and artificial intelligence. It will also help ensure that data are used for the right purposes and that patient consent and privacy are respected. A tiered architecture also better supports specialized applications such as clinical decision support and operational reporting.
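To make monitoring routine rather than occasional, data quality rules such as the three above can run on a schedule against the second tier instead of against test samples. A minimal sketch of a tiny rules engine follows, assuming second-tier records are plain dictionaries with a hypothetical `kind` field. Each rule names the record kind it applies to plus a predicate, and the scorecard reports passed and applicable counts per rule. The rule names and record fields are invented for illustration; a production engine would also need versioned rules, scheduling and access controls for protected health information.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Record = Dict[str, object]


@dataclass(frozen=True)
class Rule:
    name: str                        # hypothetical rule identifier
    applies_to: str                  # record kind the rule evaluates, e.g. "allergy"
    check: Callable[[Record], bool]  # returns True when a record passes


def scorecard(records: List[Record], rules: List[Rule]) -> Dict[str, Tuple[int, int]]:
    """Map each rule name to (records passing, records the rule applies to)."""
    results = {}
    for rule in rules:
        applicable = [r for r in records if r.get("kind") == rule.applies_to]
        passed = sum(1 for r in applicable if rule.check(r))
        results[rule.name] = (passed, len(applicable))
    return results


# Hypothetical rules loosely mirroring rules 1 and 2.
RULES = [
    Rule("allergy_coded", "allergy", lambda r: bool(r.get("code"))),
    Rule("lab_has_reference_range", "lab",
         lambda r: r.get("ref_low") is not None or r.get("ref_high") is not None),
]

if __name__ == "__main__":
    # Toy second-tier extract; a real job would read from the second-tier store.
    records = [
        {"kind": "allergy", "text": "penicillin", "code": "HYPOTHETICAL-001"},
        {"kind": "allergy", "text": "sulfa - itching"},
        {"kind": "lab", "test": "potassium", "value": 4.2, "ref_low": 3.5, "ref_high": 5.1},
    ]
    for name, (passed, total) in scorecard(records, RULES).items():
        print(f"{name}: {passed}/{total}")
```

Storing each run’s counts over time turns a one-off audit into a trend line, which is the kind of routine monitoring with real-world data that effective governance depends on.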

The software that enables better governance will come from many sectors. Some will be open source and free. Those are great places to start, but they require technical skill and caution when applied to protected health information. Other tools will come from private companies and not-for-profit organizations.

Data governance requires focus, energy and resources to succeed with the increasing scale of real-world, digital data. And it must exist locally within the institutions and regions where healthcare organizations operate. To move to shared patient care models, data must also be usable, consistent and shared across healthcare enterprises. Inadequate governance and low data quality create risk during care transitions and limit the many benefits possible with electronic health records. There is no magic bullet for data governance and data quality, but there are meaningful steps that can be taken today along the road to better information.

The views and opinions expressed in this content or by commenters are those of the author and do not necessarily reflect the official policy or position of HIMSS or its affiliates.
